Automating Report Archival in Microsoft Fabric - Part 2

- Ishan Deshpande

- Nov 8, 2025

- 3 min read

Updated: Nov 9, 2025

In Part 1 of this series, we explored how to automatically archive unused reports into a dedicated workspace in Microsoft Fabric to optimize cost and maintain workspace hygiene.

Now, in Part 2, we’ll take it a step further — cleaning up reports that have exceeded their retention period and removing their orphaned datasets if no other reports depend on them. This ensures that your Fabric environment stays clean, lean, and cost-efficient over time.

Now let's go through the code and see how it works, I have added link to the GitHub folder at the end, where you will find all the code.

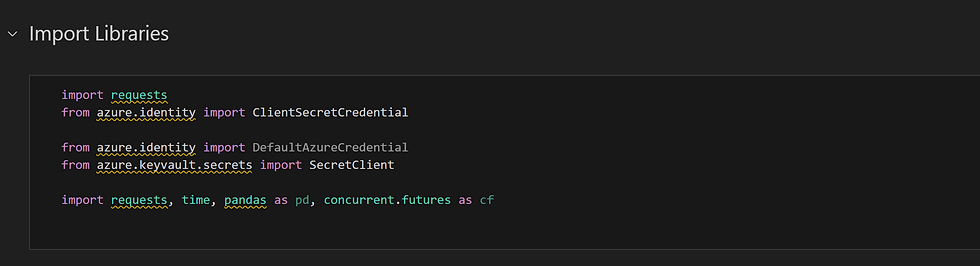

Step 1 – Import Required Libraries

As always, we begin by importing the necessary Python and Fabric libraries.

Step 2 – Set Required Variables

Next, we define configuration values such as Key Vault details and Archive Workspace ID.

Step 3 – Retrieve Service Principal Credentials from Key Vault

Just like in Part 1, we securely pull the Service Principal credentials from Azure Key Vault.

These credentials authenticate our API calls for both report and dataset deletions.

Note – User running this code need to have access to this Key Vault

Step 4 – Identify and Delete Reports from Archive Workspace

This is where the cleanup magic begins.

We first query the dim_reports table to find all reports that are present in the Archive Workspace beyond the defined retention period (e.g., 30 days)

Note: The retention logic can vary depending on business rules. If a user requests to restore a report during the retention window, you can easily restore it using the same notebook logic from Part 1 or do it manually if it’s just 1-2 reports.

Once the list of expired reports is ready, we iterate through it and delete each report using Fabric REST APIs, authenticated via the Service Principal.

This step ensures that reports past their retention date are automatically removed, freeing up workspace capacity.

After deleting these reports we update the DeletedOn column from the report_archive_history table to keep a record of when this archived table was deleted.

Step 5 – Identify and Delete Orphaned Datasets

Now that we’ve cleared old reports, it’s time to handle their underlying datasets.

We fetch all datasets that were previously linked to these archived (and now deleted) reports. Then, using metadata tables, we check whether these datasets are still connected to any other active reports.

Only datasets without active dependencies are selected for deletion.

We iterate through this filtered list and remove them using the same Fabric REST API approach, authenticated with our service principal.

This ensures that we don’t delete datasets that are still being used elsewhere — a safe and systematic cleanup.

Conclusion

With this step, our automated report archival and cleanup framework in Microsoft Fabric is complete.

By scheduling these notebooks to run weekly or monthly, organizations can:

✅ Keep workspaces clean and organized

✅ Prevent unnecessary dataset refreshes

✅ Reduce compute and storage costs

This version marks the first iteration of the complete solution — but it’s just the beginning. I’m already exploring enhancements such as:

Automated notifications to report owners before deletion

Stop dataset refresh when the report is archived (if it’s the only report connected to this dataset)

Code improvements and error handling.

If you have more ideas on how to make this framework even better, I’d love to hear from you!

Your perspective can help refine this solution and make it more valuable for everyone managing large Fabric environments.

Let’s keep improving how we govern, optimize, and scale Microsoft Fabric.

Reference Github Folder - Fabric/Automating Report Archival at main · ishan1510/Fabric